Nvidia Makes Big Artificial Intelligence Play, Teams With AWS And Major Server Vendors

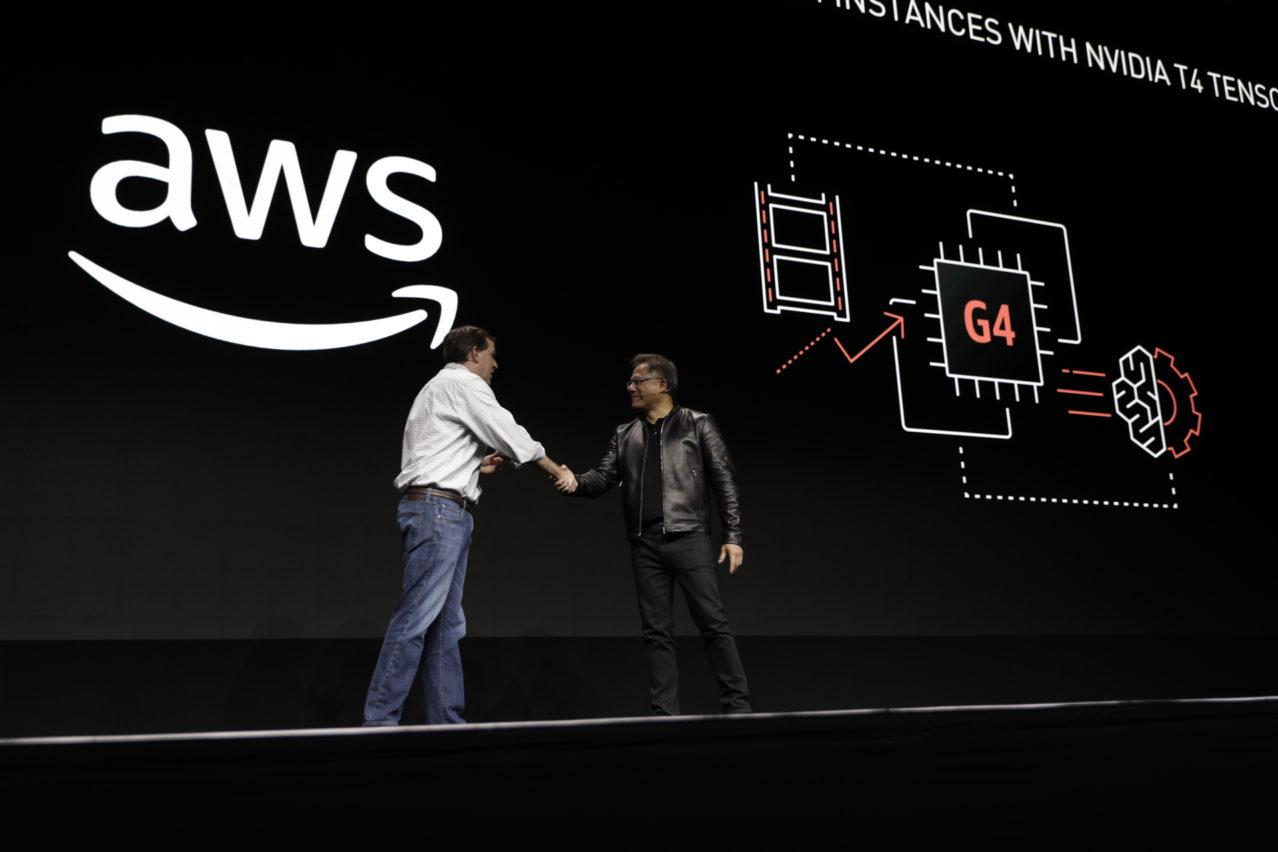

AWS' Matt Garman (left) and Nvidia's Jensen Huang

Nvidia is making a big artificial intelligence play with its Nvidia Turing T4 GPUs by enlisting some of the world's top technology firms, including cloud giant Amazon Web Services and the biggest server vendors, to help bring the technology to market.

The partnerships reinforce Nvidia's own commitment to artificial intelligence, shown not only in its Turing T4 GPUs but also in its release of enhanced software for data scientists who use machine learning and AI to help customers gain new insight into their data, said Jensen Huang, co-founder and CEO of the Santa Clara, Calif.-based vendor.

Huang, speaking during his Monday keynote at the Nvidia GPU Technology Conference, held this week in San Jose, Calif., told attendees that deep learning underlies artificial intelligence and is making data scientists the fastest-growing part of computer science.


Three factors are driving deep learning today: the enormous amount of data from sensors and user input, breakthroughs in machine learning, and massive growth in compute capabilities, Huang said.

"[Deep learning] allows us to solve new problems," he said.

Taking advantage of deep learning is where artificial intelligence comes in, Huang said.

AI is built on three platforms, he said: workstations, servers and the cloud. Running on top of all of them is software, in particular the company's CUDA parallel computing platform and programming model.

Starting off Nvidia's latest AI push is the introduction of its new CUDA-X AI libraries. CUDA-X AI brings together 15 Nvidia libraries for accelerating AI, said Ian Buck, vice president and general manager for accelerated computing at Nvidia.

The libraries run on Nvidia Tensor Core GPUs, including the latest T4 Tensor Core GPU, as well as in hyperscale clouds, Buck said.

To meet the AI requirements of the largest number of potential users, Nvidia is now partnering with Amazon Web Services, Buck said.

Under that new relationship, Amazon has introduced a new EC2 G4 cloud instance based on the Nvidia T4 Tensor Core GPUs. That instance gives AWS customers a new cloud-based platform to deploy a wide range of AI services using Nvidia GPU acceleration software such as the Nvidia CUDA-X AI libraries to accelerate deep learning, machine learning and data analytics, he said.

T4 will also be supported by Amazon Elastic Container Service for Kubernetes, letting customers use Kubernetes to deploy, manage and scale containerized applications, he said.

Matt Garman, vice president of compute services for AWS, joined Huang on stage to introduce the partnership. He said AWS provides the fastest way to offer machine-learning services, because customers can spin up an instance, run tests, make changes and then shut it down.

This is especially important as many users are still trying to understand how machine learning fits their requirements, Garman said. "The cloud is the perfect fit for machine learning," he said.

The new Nvidia CUDA-X AI acceleration libraries are also available now on Microsoft Azure. These include RAPIDS, an open-source suite of libraries for using machine learning to build predictive AI models from data stored in the cloud.

Also new is a series of T4 GPU-based servers from most of the major server vendors, Huang said. Those servers, including models from Cisco Systems, Dell EMC, Fujitsu, Hewlett Packard Enterprise, Inspur, Lenovo and Sugon, can cut tasks that take 35 minutes on standard CPUs down to three minutes, according to Nvidia benchmarks, he said.

"That's barely enough time to get up and get a cup of coffee," he said. "You'll see data scientists being less caffeinated going forward."

The new servers fit into existing data center infrastructures to help accelerate AI training and inference, machine learning, data analytics, and virtual desktop infrastructure, Buck said.